## Motivation and Findings

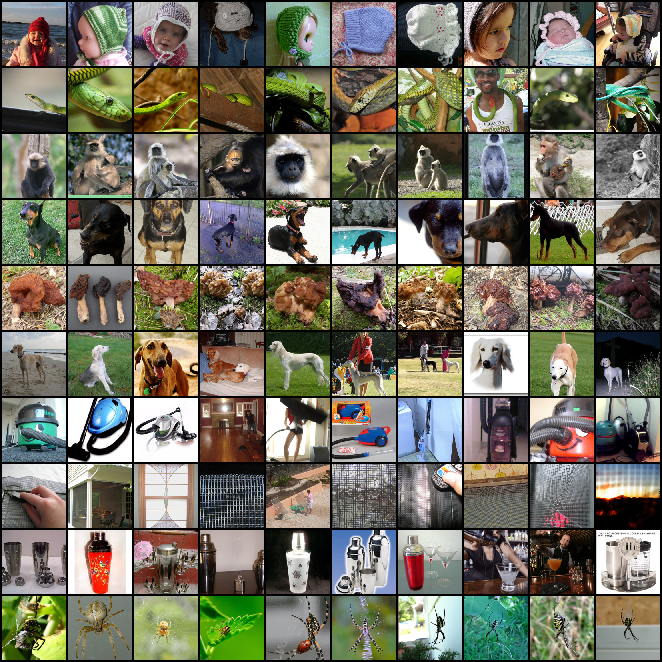

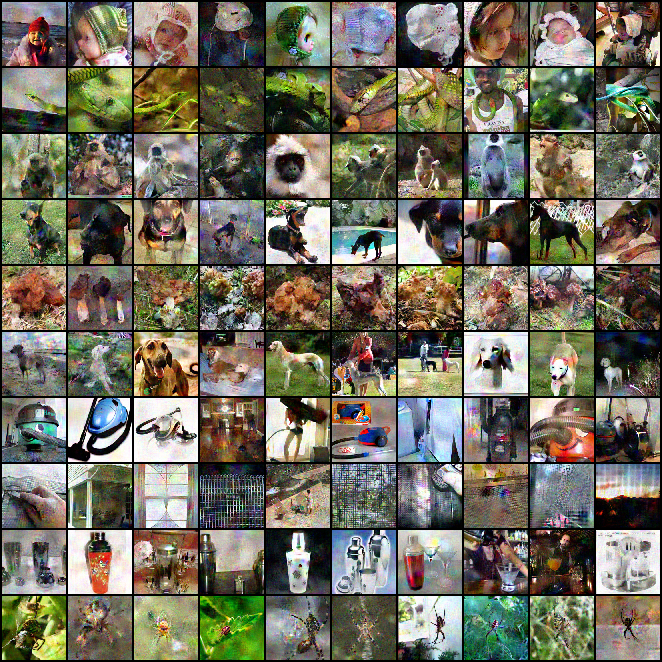

Previous methods for Dataset Condensation/Distillation based on Distribution Matching (DM) align the first-order moments of the real and synthetic sets, i.e., they minimize the MSE between the means of their feature representations. But does aligning the first-order moments lead to perfectly aligned distributions? We illustrate the resulting misalignment in higher-order moments below:

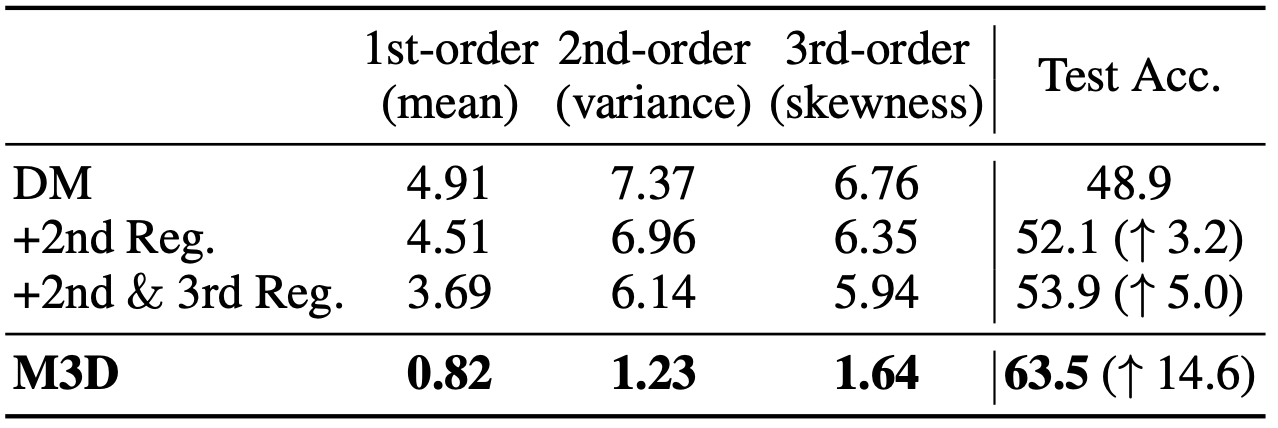
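The point of the figure can be reproduced with a toy example. The sketch below assumes the feature representations are already given as NumPy arrays (in DM they would come from an embedding network; here they are hypothetical hand-picked 2-D points). The two sets have identical means, so a mean-only MSE loss is exactly zero, yet their per-dimension variances differ widely.

```python
import numpy as np

def dm_loss(real_feats: np.ndarray, syn_feats: np.ndarray) -> float:
    """First-order (mean) matching: MSE between the mean feature vectors."""
    diff = real_feats.mean(axis=0) - syn_feats.mean(axis=0)
    return float(np.mean(diff ** 2))

# Hypothetical 2-D features: both sets have mean (0, 0) but different spreads.
real = np.array([[-2.0, 1.0], [2.0, -1.0], [0.0, 0.0]])
syn = np.array([[-0.5, 0.2], [0.5, -0.2]])

print(dm_loss(real, syn))   # the first-order loss is zero
print(real.var(axis=0))     # per-dimension variance of the real set
print(syn.var(axis=0))      # much smaller variance in the synthetic set
```

A zero first-order loss therefore says nothing about second- or higher-order statistics.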

To align higher-order moments, a naive approach is to add higher-order regularization terms to the original DM loss. As shown in the following table, adding 2nd- and 3rd-order regularization terms greatly improves the performance of the synthetic data.

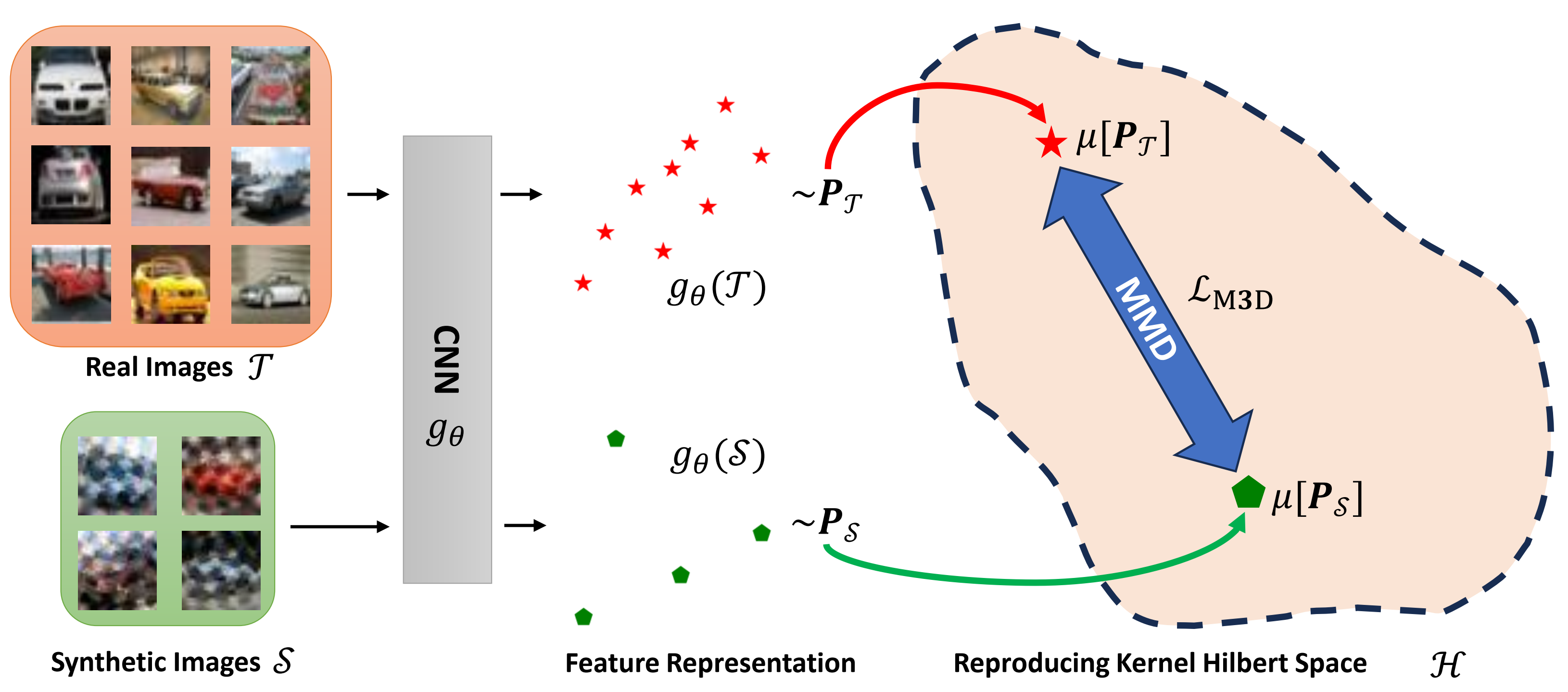
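One way such a regularized loss might look is sketched below. The exact form of the 2nd- and 3rd-order terms used in the table is not given here, so this is an assumption: each term is the MSE between per-dimension central moments of the real and synthetic features, and `weights` is a hypothetical set of coefficients (first-order, 2nd-order, 3rd-order).

```python
import numpy as np

def central_moment(x: np.ndarray, order: int) -> np.ndarray:
    """Per-dimension central moment E[(x - mean)^order] over the sample axis."""
    return np.mean((x - x.mean(axis=0)) ** order, axis=0)

def moment_matching_loss(real, syn, weights=(1.0, 1.0, 1.0)) -> float:
    """DM mean term plus 2nd- and 3rd-order moment regularizers (assumed form).

    weights: hypothetical coefficients for the 1st-, 2nd- and 3rd-order terms.
    """
    loss = weights[0] * np.mean((real.mean(axis=0) - syn.mean(axis=0)) ** 2)
    for w, k in zip(weights[1:], (2, 3)):
        loss += w * np.mean((central_moment(real, k) - central_moment(syn, k)) ** 2)
    return float(loss)

# Hypothetical features with equal means but different variances.
real = np.array([[-2.0, 1.0], [2.0, -1.0], [0.0, 0.0]])
syn = np.array([[-0.5, 0.2], [0.5, -0.2]])

print(moment_matching_loss(real, syn, weights=(1.0, 0.0, 0.0)))  # mean-only: 0
print(moment_matching_loss(real, syn))  # the 2nd-order term exposes the mismatch
```

Each extra order needs its own term and weight, which is what makes this approach hard to extend to all orders.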

However, it is impractical to add infinitely many regularization terms to align moments of every order. Fortunately, a reproducing kernel Hilbert space (RKHS) can embed moments of all orders through a single, finite kernel function, which lets us directly compute the distance between the distributions of the real and synthetic sets and yields a synthetic set whose distribution is better aligned with the real one. The framework of our method is as follows:
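A standard way to measure distance in an RKHS is the (squared) Maximum Mean Discrepancy, MMD² = E[k(x, x')] + E[k(y, y')] − 2·E[k(x, y)]. With a characteristic kernel such as the Gaussian kernel, it is zero only when the two distributions coincide, so it accounts for all moments at once. The sketch below is a minimal NumPy version, assuming a Gaussian kernel with a hypothetical bandwidth `sigma` and the biased (V-statistic) estimator. The kernel and estimator choices in the actual method may differ.

```python
import numpy as np

def gaussian_kernel(x: np.ndarray, y: np.ndarray, sigma: float = 1.0) -> np.ndarray:
    """Gaussian kernel matrix between the rows of x and the rows of y."""
    sq = np.sum(x ** 2, axis=1)[:, None] + np.sum(y ** 2, axis=1)[None, :] - 2.0 * x @ y.T
    # Clamp tiny negative values caused by floating-point rounding.
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * sigma ** 2))

def mmd2(x: np.ndarray, y: np.ndarray, sigma: float = 1.0) -> float:
    """Biased estimate of the squared MMD between samples x and y."""
    kxx = gaussian_kernel(x, x, sigma).mean()
    kyy = gaussian_kernel(y, y, sigma).mean()
    kxy = gaussian_kernel(x, y, sigma).mean()
    return float(kxx + kyy - 2.0 * kxy)

# Hypothetical features: equal means, different spreads.
real = np.array([[-2.0, 1.0], [2.0, -1.0], [0.0, 0.0]])
syn = np.array([[-0.5, 0.2], [0.5, -0.2]])

print(mmd2(real, syn))   # positive, although the means match exactly
print(mmd2(real, real))  # zero for identical sets
```

In a distillation loop this value would be computed on network features and minimized with respect to the synthetic data via automatic differentiation. The sketch only evaluates the distance.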